So, here we are, diving headfirst into the world of remoteIoT batch jobs in AWS. Picture this: you're managing a massive IoT ecosystem, with thousands of devices sending data around the clock. How do you process all of it without your system buckling? Enter AWS Batch, a managed service for running batch computing workloads in the cloud. It's a practical option for businesses that need to process large-scale IoT data efficiently. So, buckle up, because we're about to explore how AWS Batch can change the way you manage your IoT data.

AWS is a widely used platform for scalable, reliable cloud solutions. When it comes to processing IoT data, AWS Batch simplifies the execution of batch computing workloads. Whether you're dealing with sensor data, log processing, or analytics, AWS Batch schedules your jobs and provisions the compute they need. And let's face it, when you're talking about IoT, efficiency is king. This is where AWS Batch shines: it scales capacity to match the work waiting in your queues.

But why should you care? Because understanding remoteIoT batch jobs in AWS isn't just about staying ahead of the curve; it's about staying in the game. As more businesses embrace IoT technology, the ability to process and analyze vast amounts of data becomes crucial. AWS Batch offers a pathway to achieving this, which makes it a useful tool for anyone serious about IoT. So, let's dive deeper into what makes AWS Batch so special and how it can transform your IoT operations.

Understanding AWS Batch and Its Role in RemoteIoT

AWS Batch is like the unsung hero of cloud computing, quietly working behind the scenes to make your IoT data processing dreams come true. At its core, AWS Batch is a managed service that allows you to run batch computing workloads on the AWS cloud. But what does that mean for you? It means you can execute large-scale computations without worrying about the underlying infrastructure. AWS Batch takes care of all the heavy lifting, ensuring your jobs are completed efficiently and cost-effectively.

When it comes to IoT, the volume of data can be overwhelming. Imagine thousands of devices generating data every second. Processing all of that information record by record as it arrives can be a daunting task. This is where AWS Batch steps in: you collect the data and process it in batches, and the service scales to handle demanding workloads. By automating the process of provisioning and managing compute resources, AWS Batch allows you to focus on what really matters: analyzing your data and deriving actionable insights.

Key Features of AWS Batch

- Scalability: AWS Batch can scale up or down depending on your workload demands. This means you only pay for the resources you use, making it a cost-effective solution.

- Automation: The service automatically provisions the necessary compute resources, eliminating the need for manual intervention.

- Flexibility: AWS Batch supports a range of compute options, including EC2 On-Demand Instances, EC2 Spot Instances, and AWS Fargate, giving you the flexibility to choose the best option for your needs.

- Integration: It seamlessly integrates with other AWS services, such as S3, DynamoDB, and Lambda, making it easy to build end-to-end IoT solutions.

Setting Up Your First RemoteIoT Batch Job in AWS

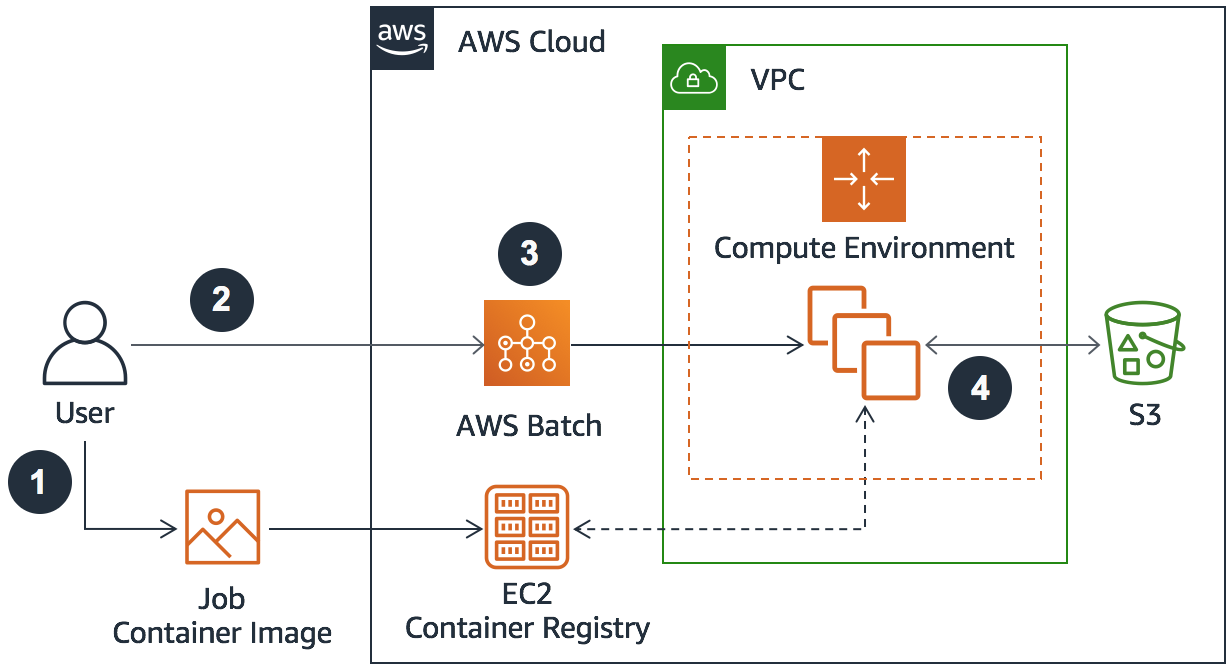

Alright, let's get our hands dirty and walk through the process of setting up your first remoteIoT batch job in AWS. The first step is to create a compute environment. Think of this as the foundation upon which your batch jobs will run. AWS Batch offers two types of compute environments: managed and unmanaged. In a managed compute environment, AWS Batch provisions and scales the compute resources for you; in an unmanaged one, you manage them yourself. For most IoT applications, a managed compute environment is the way to go.
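As a rough illustration of that first step, here is a minimal sketch that builds the request for a managed compute environment. The function name, the environment name, and the subnet, security group, and instance role values are placeholders invented for this example; the keys follow the parameters of the AWS Batch `CreateComputeEnvironment` API as exposed by boto3.

```python
def build_compute_environment(name, subnets, security_group_ids,
                              instance_role, max_vcpus=64):
    """Return keyword arguments for Batch's CreateComputeEnvironment call."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",          # AWS Batch provisions and scales instances
        "state": "ENABLED",
        "computeResources": {
            "type": "EC2",          # On-Demand EC2 capacity
            "allocationStrategy": "BEST_FIT_PROGRESSIVE",
            "minvCpus": 0,          # scale to zero when no jobs are waiting
            "maxvCpus": max_vcpus,
            "instanceTypes": ["optimal"],
            "subnets": list(subnets),
            "securityGroupIds": list(security_group_ids),
            "instanceRole": instance_role,
        },
    }


# Placeholder identifiers; replace them with values from your own account.
ce_params = build_compute_environment(
    name="iot-managed-ce",
    subnets=["subnet-EXAMPLE"],
    security_group_ids=["sg-EXAMPLE"],
    instance_role="ecsInstanceRole",
)

# To create the environment for real (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("batch").create_compute_environment(**ce_params)
```

Keeping the request in a plain function makes it easy to review or unit-test the configuration before anything is created in your account.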

Once your compute environment is set up, it's time to define your job queue. A job queue is like a waiting room for your batch jobs, where they sit patiently until they're ready to be executed. You can create multiple job queues to prioritize your jobs based on their importance or urgency. This is especially useful in IoT applications where some data might require immediate processing while others can wait.
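To make the prioritization idea concrete, the sketch below defines two queues that share one compute environment. The queue names, the priority values, and the compute environment name are assumptions for illustration. When queues share a compute environment, AWS Batch evaluates the queue with the higher priority number first.

```python
def build_job_queue(name, priority, compute_environment):
    """Return keyword arguments for Batch's CreateJobQueue call."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,  # higher integer = evaluated first
        "computeEnvironmentOrder": [
            {"order": 1, "computeEnvironment": compute_environment},
        ],
    }


# Hypothetical split: alerts that need quick processing vs. routine reports.
urgent_queue = build_job_queue("iot-urgent", 100, "iot-managed-ce")
routine_queue = build_job_queue("iot-routine", 10, "iot-managed-ce")

# To create the queues for real (requires boto3 and AWS credentials):
#   import boto3
#   batch = boto3.client("batch")
#   batch.create_job_queue(**urgent_queue)
#   batch.create_job_queue(**routine_queue)
```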

Creating Your First Job Definition

Now comes the fun part: creating your first job definition. A job definition is essentially a blueprint for your batch job, specifying everything from the container image to the resource requirements. For IoT applications, you might want to use a container image that includes all the necessary tools and libraries for processing your data. AWS Batch makes it easy to define these parameters, ensuring your jobs run smoothly and efficiently.

- Container Image: Choose an image that includes all the necessary tools for your IoT data processing tasks.

- Resource Requirements: Specify the amount of CPU and memory your job will need. AWS Batch will automatically provision the necessary resources based on these requirements.

- Environment Variables: Use environment variables to pass configuration parameters to your job, making it easy to customize behavior without modifying the container image.
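The three settings above map directly onto a job definition request. The following is a sketch only: the image URI, the command, the job definition name, and the environment variable names are hypothetical, while the request keys follow the AWS Batch `RegisterJobDefinition` API as exposed by boto3.

```python
def build_job_definition(name, image, vcpus, memory_mib, env):
    """Return keyword arguments for Batch's RegisterJobDefinition call."""
    return {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": {
            "image": image,
            # Hypothetical entry point baked into the container image.
            "command": ["python", "process.py"],
            # Batch expects resource values as strings; memory is in MiB.
            "resourceRequirements": [
                {"type": "VCPU", "value": str(vcpus)},
                {"type": "MEMORY", "value": str(memory_mib)},
            ],
            "environment": [
                {"name": key, "value": value}
                for key, value in sorted(env.items())
            ],
        },
    }


job_def = build_job_definition(
    name="iot-sensor-processor",
    image="123456789012.dkr.ecr.us-east-1.amazonaws.com/iot-processor:latest",
    vcpus=1,
    memory_mib=2048,
    env={"OUTPUT_BUCKET": "example-iot-results", "LOG_LEVEL": "INFO"},
)

# To register it for real (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("batch").register_job_definition(**job_def)
```

Because the configuration travels in environment variables, the same image can serve several job definitions that differ only in their settings.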

Best Practices for Optimizing RemoteIoT Batch Jobs

Now that you've got the basics down, let's talk about how to optimize your remoteIoT batch jobs in AWS. Optimization is key to ensuring your jobs run efficiently and cost-effectively. Here are a few best practices to keep in mind:

- Right-Sizing Your Compute Resources: Make sure you're using the right size instances for your jobs. Over-provisioning can lead to unnecessary costs, while under-provisioning can result in slower job execution.

- Using Spot Instances: Spot Instances can significantly reduce your costs by up to 90%. However, be aware that they can be interrupted, so they're best suited for jobs that can be restarted without issue.

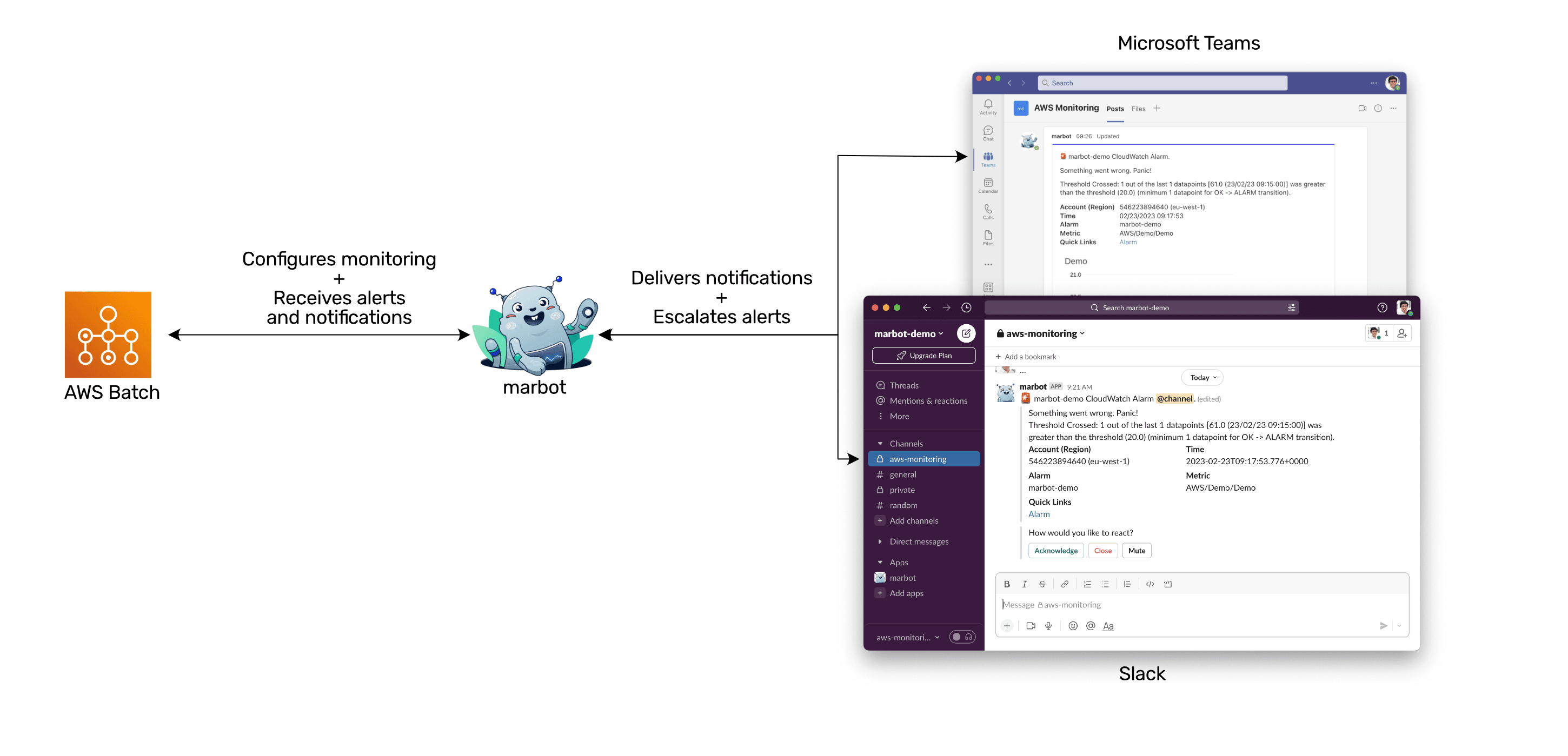

- Monitoring and Logging: Use AWS CloudWatch to monitor your jobs and logs. This will help you identify any bottlenecks or issues that might be affecting performance.
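As one possible way to act on the Spot Instances advice, the sketch below takes the `computeResources` section of an On-Demand compute environment and derives a Spot variant from it. The helper name and the sample values are assumptions for this example; `SPOT` and `SPOT_CAPACITY_OPTIMIZED` are values accepted by the AWS Batch compute environment API.

```python
import copy


def to_spot(compute_resources, bid_percentage=None):
    """Return a Spot variant of an On-Demand computeResources mapping."""
    spot = copy.deepcopy(compute_resources)  # leave the original untouched
    spot["type"] = "SPOT"
    # Prefer Spot pools that are less likely to be interrupted.
    spot["allocationStrategy"] = "SPOT_CAPACITY_OPTIMIZED"
    if bid_percentage is not None:
        # Maximum price as a percentage of the On-Demand price.
        spot["bidPercentage"] = bid_percentage
    return spot


# Placeholder On-Demand settings; the IDs are not real.
on_demand_resources = {
    "type": "EC2",
    "allocationStrategy": "BEST_FIT_PROGRESSIVE",
    "minvCpus": 0,
    "maxvCpus": 64,
    "instanceTypes": ["optimal"],
    "subnets": ["subnet-EXAMPLE"],
    "securityGroupIds": ["sg-EXAMPLE"],
    "instanceRole": "ecsInstanceRole",
}

spot_resources = to_spot(on_demand_resources, bid_percentage=60)
```

A common arrangement is to attach both a Spot and an On-Demand compute environment to the same job queue, so interruptible work lands on Spot capacity first.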

Handling Failures and Retries

Failures are an inevitable part of any computing process, and batch jobs are no exception. AWS Batch provides built-in mechanisms for handling failures and retries, ensuring your jobs are completed successfully even in the face of adversity. By configuring retry strategies and error handling, you can minimize the impact of failures and ensure your IoT data processing pipeline remains robust and reliable.
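A retry strategy is attached to a job definition (or supplied when submitting a job). The sketch below builds one that retries when the host instance is lost, for example after a Spot interruption, and exits immediately on any other failure. The function name and the default of three attempts are choices made for this example; the `attempts` and `evaluateOnExit` fields belong to the AWS Batch `retryStrategy` parameter, which allows between 1 and 10 attempts.

```python
def build_retry_strategy(attempts=3):
    """Return a retryStrategy mapping for a Batch job definition."""
    if not 1 <= attempts <= 10:
        raise ValueError("AWS Batch accepts between 1 and 10 attempts")
    return {
        "attempts": attempts,
        # Conditions are evaluated in order; the first match decides.
        "evaluateOnExit": [
            # Host-level failures (such as a reclaimed instance): try again.
            {"onStatusReason": "Host EC2*", "action": "RETRY"},
            # Anything else is treated as a job error: stop retrying.
            {"onReason": "*", "action": "EXIT"},
        ],
    }


retry_strategy = build_retry_strategy(attempts=3)

# Pass it along when registering a job definition, for example:
#   batch.register_job_definition(..., retryStrategy=retry_strategy)
```

Separating infrastructure failures from application failures avoids burning retries on a job that will fail the same way every time.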

Real-World Examples of RemoteIoT Batch Jobs in AWS

Let's take a look at some examples of how organizations are using remoteIoT batch jobs in AWS to process and analyze their IoT data. One company, for instance, uses AWS Batch to process sensor data from thousands of industrial machines. By analyzing this data in frequent batches, they're able to predict maintenance needs and prevent costly downtime. Another organization uses AWS Batch to process log data from their IoT devices, gaining valuable insights into user behavior and system performance.

These examples illustrate the versatility and power of AWS Batch in handling large-scale IoT data processing tasks. Whether you're dealing with sensor data, log processing, or analytics, AWS Batch offers a scalable and reliable solution that can meet the demands of even the most complex IoT applications.

Case Study: XYZ Corporation

XYZ Corporation, a leading manufacturer of smart devices, turned to AWS Batch to handle their growing IoT data processing needs. By leveraging AWS Batch, they were able to reduce processing times by 40% while cutting costs by 30%. Their success story is a testament to the power of AWS Batch in transforming IoT operations.

Integrating AWS Batch with Other AWS Services

AWS Batch doesn't exist in a vacuum; it's part of a larger ecosystem of AWS services that work together to provide end-to-end IoT solutions. By integrating AWS Batch with other AWS services, such as S3, DynamoDB, and Lambda, you can build powerful workflows that handle everything from data ingestion to analysis and visualization. This integration not only simplifies the management of your IoT data but also enhances the capabilities of your applications.

Building End-to-End IoT Solutions

Here's how you can use AWS services to build an end-to-end IoT solution:

- Data Ingestion: Use AWS IoT Core to collect and process data from your devices.

- Data Storage: Store your data in S3 for long-term storage or DynamoDB for fast, scalable access.

- Data Processing: Use AWS Batch to process your data, performing tasks such as analytics, machine learning, and more.

- Data Visualization: Use Amazon QuickSight to create dashboards and visualizations that make it easy to understand your data.
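One way to connect the storage and processing stages is a Lambda function that submits a Batch job whenever a new object lands in S3. The sketch below assumes an S3 event notification as the trigger; the queue name, job definition name, and environment variable names are hypothetical, and the optional `batch_client` argument exists only so the handler can be exercised without AWS access.

```python
import re
from urllib.parse import unquote_plus

JOB_QUEUE = "iot-routine"                # hypothetical job queue name
JOB_DEFINITION = "iot-sensor-processor"  # hypothetical job definition name


def build_submit_job_params(event):
    """Translate an S3 event notification into SubmitJob arguments."""
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    key = unquote_plus(record["object"]["key"])  # keys arrive URL-encoded
    # Job names may only contain letters, numbers, hyphens, and underscores.
    safe_key = re.sub(r"[^A-Za-z0-9_-]", "-", key)[:100]
    return {
        "jobName": f"process-{safe_key}",
        "jobQueue": JOB_QUEUE,
        "jobDefinition": JOB_DEFINITION,
        "containerOverrides": {
            "environment": [
                {"name": "INPUT_BUCKET", "value": bucket},
                {"name": "INPUT_KEY", "value": key},
            ]
        },
    }


def lambda_handler(event, context, batch_client=None):
    """Lambda entry point: submit one Batch job per uploaded object."""
    if batch_client is None:
        import boto3  # available in the Lambda Python runtime
        batch_client = boto3.client("batch")
    response = batch_client.submit_job(**build_submit_job_params(event))
    return {"jobId": response["jobId"]}
```

The container then reads `INPUT_BUCKET` and `INPUT_KEY` to find the file it should process, so the job definition itself never needs to change per upload.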

Security and Compliance in RemoteIoT Batch Jobs

Security and compliance are critical considerations when it comes to remoteIoT batch jobs in AWS. AWS provides a robust set of security features to protect your data and ensure compliance with industry standards. These include encryption, access control, and auditing capabilities that help safeguard your IoT data.

Best Practices for Security

- Encryption: Use encryption to protect your data both in transit and at rest.

- Access Control: Implement strict access controls to ensure only authorized personnel can access your data.

- Auditing: Regularly audit your systems to identify and address any security vulnerabilities.
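For the access control point, a least-privilege IAM policy for the role your jobs run under might look like the sketch below. The bucket names and statement IDs are placeholders, and the policy assumes the job only reads raw data from one bucket and writes results to another; adjust the actions to whatever your job really needs.

```python
import json


def build_job_role_policy(input_bucket, output_bucket):
    """Return an IAM policy document limited to the job's two buckets."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadRawData",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{input_bucket}/*",
            },
            {
                "Sid": "WriteResults",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{output_bucket}/*",
            },
        ],
    }


# Placeholder bucket names for illustration.
policy = build_job_role_policy("example-iot-raw", "example-iot-results")
policy_json = json.dumps(policy, indent=2)  # ready to paste into IAM
```

Scoping each statement to a single bucket means a compromised or buggy job cannot read or overwrite data elsewhere in the account.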

Cost Management and Optimization

Managing costs is a key concern for any organization using AWS Batch for IoT data processing. AWS provides several tools and strategies to help you manage and optimize your costs, ensuring you get the most value for your money.

Using Cost Management Tools

AWS Cost Explorer and AWS Budgets are powerful tools that can help you track and manage your costs. By setting budgets and alerts, you can stay on top of your expenses and make informed decisions about your resource usage.
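Budgets and alerts can also be defined in code. The sketch below builds a request for a monthly cost budget that emails a warning once actual spend passes a percentage of the limit. The budget name, account ID, limit, and email address are placeholders; the structure follows the AWS Budgets `CreateBudget` API as exposed by boto3.

```python
def build_budget_request(account_id, monthly_limit_usd, email,
                         threshold_percent=80.0):
    """Return keyword arguments for the Budgets CreateBudget call."""
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": "iot-batch-monthly",  # hypothetical budget name
            "BudgetLimit": {
                "Amount": f"{monthly_limit_usd:.2f}",  # amount is a string
                "Unit": "USD",
            },
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": [
            {
                "Notification": {
                    "NotificationType": "ACTUAL",
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": threshold_percent,
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [
                    {"SubscriptionType": "EMAIL", "Address": email},
                ],
            }
        ],
    }


# Placeholder account ID and address for illustration.
budget_request = build_budget_request("123456789012", 500, "ops@example.com")

# To create the budget for real (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("budgets").create_budget(**budget_request)
```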

Future Trends and Innovations in RemoteIoT Batch Jobs

The world of IoT is constantly evolving, and with it come new trends and innovations in remoteIoT batch jobs in AWS. As more organizations embrace IoT technology, the demand for scalable, efficient data processing solutions will continue to grow. AWS Batch is well-positioned to meet this demand, offering a platform that can adapt to the changing needs of IoT applications.

Looking Ahead

As we look to the future, expect to see advancements in areas such as machine learning, edge computing, and serverless architectures. These technologies will further enhance the capabilities of AWS Batch, making it an even more powerful tool for IoT data processing.

Conclusion

In conclusion, remoteIoT batch jobs in AWS offer a powerful solution for managing and processing large-scale IoT data. By leveraging the capabilities of AWS Batch, organizations can achieve greater efficiency, scalability, and cost-effectiveness in their IoT operations. So, whether you're just getting started with IoT or looking to enhance your existing infrastructure, AWS Batch is definitely worth exploring.

Now, it's your turn. Have you tried using AWS Batch for your IoT data processing needs? Share your experiences in the comments below and let's continue the conversation. And don't forget to check out our other articles for more insights into the world of IoT and cloud computing.

Table of Contents

- Understanding AWS Batch and Its Role in RemoteIoT

- Key Features of AWS Batch

- Setting Up Your First RemoteIoT Batch Job in AWS

- Creating Your First Job Definition

- Best Practices for Optimizing RemoteIoT Batch Jobs

- Handling Failures and Retries

- Real-World Examples of RemoteIoT Batch Jobs in AWS

- Case Study: XYZ Corporation

- Integrating AWS Batch with Other AWS Services

- Building End-to-End IoT Solutions

- Security and Compliance in RemoteIoT Batch Jobs

- Best Practices for Security

- Cost Management and Optimization

- Using Cost Management Tools

- Future Trends and Innovations in RemoteIoT Batch Jobs